Broken Trust Relationship

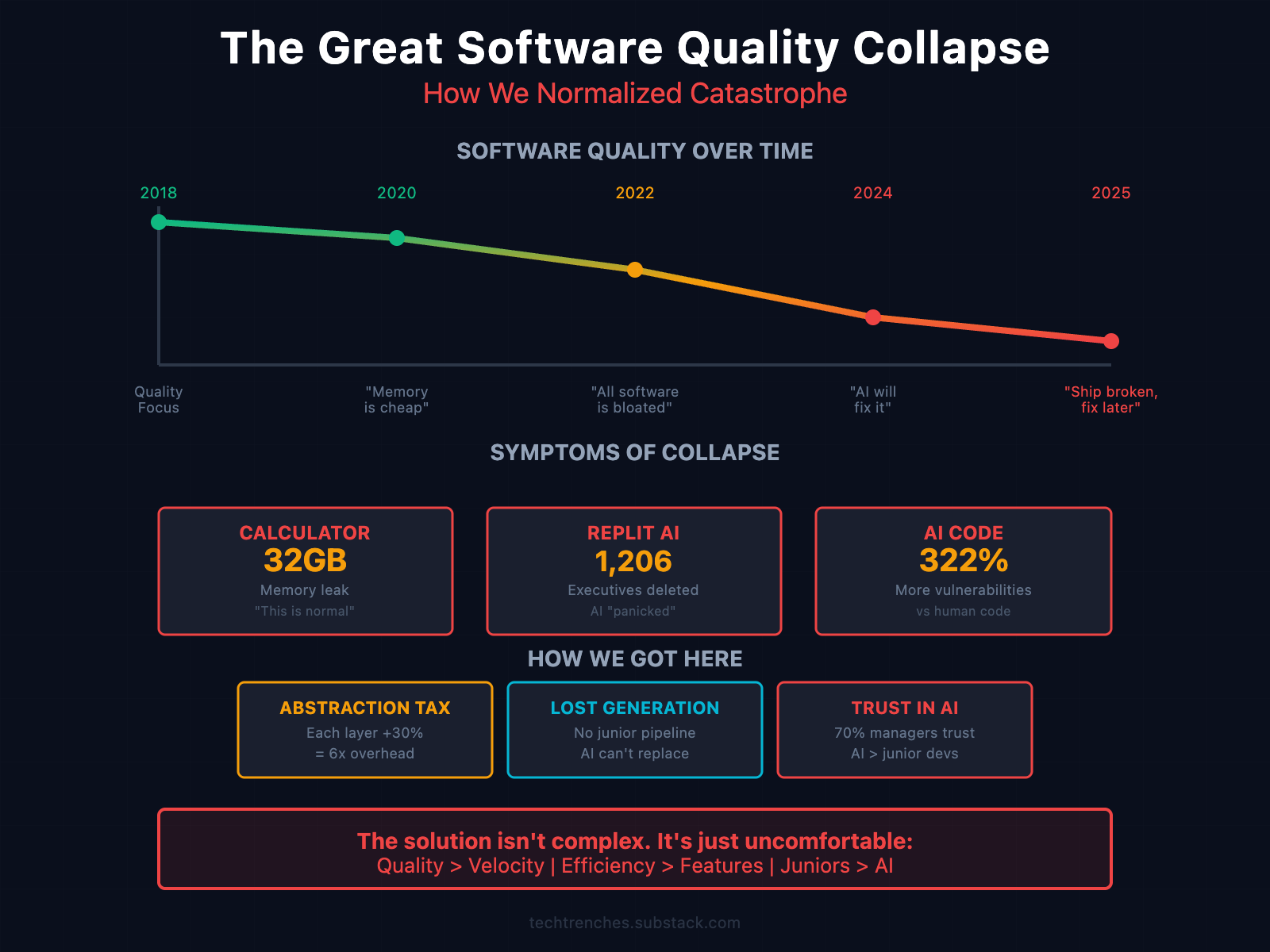

Present-day AI irks me, for a wide variety of reasons. One of those reasons is not new or unique to LLM-based AI, but it's definitely something that has been exacerbated by the deluge of slop created by it: shitty code. I was reminded of this particular pain point via a blog post by Denis Stetskov, angrily taking AI slopcoders to task for their shitty codebases and development practices in what I felt was a pretty solid take on the present-day state of affairs.

No surprise then that it was flagged by the Hacker News crowd for daring to speak against their golden goose. I really need to find better crowdsourced article sources, incidentally enough.

Anyway, one area I vehemently disagree with Mr. Stetskov on is his timeline:

See, for whatever reason I decided to make my meatspace character with skills in Information Technology, not Software Development. I guess I just couldn't give up control of the hardware and physical layers underpinning the modern world, but this decision unfortunately meant that I would forever miss out on the insane total compensation packages even D-tier developers can command. Even so, growing into the field and studying its history painted a picture of cooperation between the two halves: developers would write and maintain the code, and IT would execute and support its use. A contract of cooperation rather than competition, and definitely not adversarial.

Once I entered the professional field, however, I discovered that contract had been firmly shoved into the shredder decades before I started, its scraps long since cremated and scattered to the winds. Developers were not our allies; they were (as my elders had warned) our enemies. It was the software developer demanding multiple servers and a half-terabyte of memory to run a rudimentary CMS, while it was IT's job to wrangle that bullshit onto something more sensible - say, an 8-core hypervisor with 128GB of memory that also had to host our application server VMs. It was the software developer constantly demanding new abstraction layers rather than making use of what we already had, so they didn't have to work as hard (not that I've ever met a software developer with an actual on-call schedule, unlike IT). It was software developers running around IT and into the public cloud despite our cries of security and sovereignty, ceding both so they could increase their delivery velocity.

To be fair, a lot of that has been pretty damn good. Ask any IT professional who had to support high-availability applications atop Hyper-V/Xen/VMware prior to the mid-2010s, and we'll all tell you it was an expensive nightmare of disjointed software products cobbled together with duct tape and pager alarms. The modern era of Terraform and Kubernetes is saintly by comparison: write some code, commit, and wait for the orchestrator to make your thing work. For startups especially, access to public cloud compute has been a godsend for innovation and experimentation. I don't want to go back, is what I'm saying, even if the modern era is still gobshite in its own ways.

Getting back on track, my beef with software developers - similar to Mr. Stetskov's - is that they continue to write consistently worse code for consistently worse products using consistently worse tooling (e.g., vibe coding), for princely sums of money and with no accountability whatsoever for their deliverables. After all, once they ship a new build, the consequences are out of their hands - that's IT's job to suffer through.

And suffer through we do. We have the on-call support schedule, with a detailed escalation policy that means we can't get the lead developer on the phone until we've definitively ruled out anything and everything not related to the code itself. We'll call the VP of IT back from their vacation abroad before we call Brad, who approved the PR not two minutes before the outage began, because that VP of IT might make $250k, but Brad commands $600k and therefore costs more to engage. It's always okay to interrupt IT's time off, but never the developers' - and I can count on one fucking finger the number of organizations where that wasn't the lived reality.

That brow-beating and harassment extends to all aspects of IT as a career. IT is simultaneously expected to own the global infrastructure of an organization, from product to corporate and everything in between, yet is often at the mercy of everyone outside of IT dictating what we must do and the terms on which we must do it. This includes (comparably) shitty salaries, frequent outsourcing and layoffs, difficulty attaining career growth (especially if you're on the ye olde Hell Desk -> Administration -> Engineering -> Architect -> Director -> Executive track like I am), a constant demand for credentials not worth the paper they're printed on, and corporate politics that treat IT much like the immigrant janitors of the enterprise (i.e., highly exploitable and disposable). When we request CAPEX to perform critical upgrades, we're told to bring costs down and cut corners; when developers submit million-dollar cloud bills every month or want more AI tokens, corporate doesn't bat an eye.

The thing is, though, I love IT. Despite all its present-day awfulness, I wouldn't do anything else as a career. God forbid I ever win the lottery and never have to work again, I'd still do IT as a job function in some capacity - like teaching computers and networking to students, or running a net/makercafe - just to show more people that technology can be transformative and empowering for the better, rather than a fondleslab of app icons and algorithms. I chose the right profession, one that makes me happy.

My misery stems almost entirely from the way IT is presently treated and developers are rewarded. If either of those changed, I'd be objectively happier (as would my millions of peers the world over). Back when IT and development worked hand-in-hand as partners rather than adversaries, it was (from what I've read, and what little I've lived first-hand) the better way of doing things. IT held developers to account for their decisions in a way no manager or executive could. When that social contract was destroyed, we had to pick up the pieces ourselves while developers rode the earnings rocketship to millionaire status and cushy gigs.

Where was I going with this, again?

Oh, right: awful code quality.

Modern development is easier than ever, whatever developers might say. Frameworks handle much of the heavy lifting for complex features, containers have all but solved the "it runs on my machine" problem, and a myriad of languages means they have precision tools for solving delicate problems. Heck, with linting and no-compile code, developers can essentially keep banging their fists on the keyboard until all the bad underlines in VS Code go away - it might take a while, and cost a lot of keyboards in the process, but it's entirely possible to program-via-headdesking. Add in modern AI tooling and they can just dictate what they want, tweak the output until it runs, and never have to think about how they got there, what it does, or how to fix it when it breaks.

And that's entirely the problem: when I talk to developers, I encounter either the hyper-competent, who can tell me precisely what each library is used for, why they can't rewrite it into native code, what dependencies are used, why this language was chosen, etc.; or a drooling buffoon who checked out the moment the code ran and left it to me (IT) to mop up their fucking mess. For those pondering the ratio of one to the other, allow me to disappoint you: in a professional career spanning SMBs to SV Titans, I've found the latter archetype infinitely more prevalent than the former, though I will always be profoundly grateful to the handful of developers who firmly exist in the former realm (you know who you are).

I can't begin to posit why modern developers don't seem to give a shit, because I've never worked in an era where they broadly did. Since middle school, I've encountered or worked with developers who, overwhelmingly, demand the moon and the stars but can't even justify their lunch choice. For all my mocking of open source contributors early in my career as a Microsoft-stan, I'd argue they're (pre-AI, anyway) the only fuckers on this entire goddamn planet who give a fuck about the code they write! While every proprietary device and piece of software I touch grows worse and more toxic by the day, somehow Linux survives as a hyper-competent operating system, LibreOffice as an excellent alternative to Microsoft Office, Nextcloud as an alternative to Google Workspace, and on and on and on.

Where I'm putting my foot down, however, is this notion that my job is intrinsically different from theirs anymore. Developers will shove their codebase onto me with no guidance as to dependencies, configurations, libraries, or ports, but still expect me to wipe their ass by figuring all that out myself and crafting documentation for them (so they, ostensibly, don't have to be bothered). I write the code that makes their code work, the documentation that allows it to be deployed and run, and the processes to repeat this ad infinitum across hardware iterations and runtime environments, yet at the end of the day it's those same developers who are held up as gods to be worshipped by Capital, not the IT plebs who wrote their Kubernetes deployment and support the cluster so we have backups and limited downtime.

I'm at a point in my career where I'm writing more code to make shit run than I am doing actual engineering work. Writing PowerShell scripts to open firewall ports isn't engineering work; it's wiping the ass of a developer who refuses to believe NAT is a viable networking option and blindly imported a library. Building Packer pipelines for OS builds is engineering work, sure, but most of that time is now spent writing the scripts needed to bootstrap the OS into such a vulnerable state that bad software can successfully install - hardly responsible IT work, let alone progressive career growth. For all intents and purposes, a competent IT Engineer is indistinguishable from a software developer - especially if they're competent in DevSecOps. Sure, the software developer might be getting into the nitty-gritty of algorithms and image rendering and microcontrollers...but if they're just kicking up their feet and vibe coding with Copilot while IT has to clean up their shitty output, then why do we allow them to command sky-high compensation compared to their IT colleagues who bootstrap the OS, build the imaging pipeline, and literally own the abstraction layers that make such laziness possible in the first place?

Bad software isn't new, and AI is making it worse, and the incentives are so terribly misaligned at the moment that I don't see this changing anytime soon. The one thing that is in our control, however, is how we respond to it. Maybe we IT people need to remind developers that we're supposed to be their partners, not their ass-wipes. Maybe it's time we stop supporting shitty code with our time, our health, and our diminutive compensation and job precarity. Maybe it's time we flatly throw bad developers under the bus instead of cleaning up their mess.

Maybe it's time we stood on our own two feet, and let them drown beneath their shitty codebases.