Sovereignty and AI

I feel pretty confident saying that I was neither entirely right nor entirely wrong in my stances on AI. While I appear to be vindicated in my position that these things cannot think or reason, that they cannot be trusted with decision-making due to hallucinations and reward hacking, and that they consume inordinate amounts of resources in both their creation and their ongoing operation, I was also dead wrong in my position that they cannot be useful or are little more than toys.

They're pattern matchers that operate on natural language. That's a game changer I overlooked because I was solely focused on refuting boosters and bubble-pumpers instead of doing what an engineer does best: objectively evaluating technologies for use in solving problems.

Yet for all the posts about vibe coding with Claude for fun and profit, I still maintain that the economics of current AI, as it's billed today, are completely untenable over any longer horizon. The simple reason is that major AI companies have to build the models, the infrastructure, and the tooling to justify already-insane $200/month subscription plans, which themselves operate at a steep loss by burning venture capital. Their business goal is essentially entrapment via walled garden: getting you to build your corporate and product infrastructure on their tools, their models, and their infrastructure, then making it as painful as possible to leave through forced updates and ever-changing guardrails or APIs.

Except this plan doesn't seem to be working out, thanks to tools like MCP and OpenRouter that let users route specific prompts or entire workloads to whichever model is cheapest and best suited to the task, orchestrating grandiose agent workflows across multiple vendors in a single stroke. That's great for users right now, but Cursor is a prime example of why it won't stay this way for long. With too few major customers and an ARR far outstripped by spending on new models (which deliver diminishing returns), these companies look great if you only check very specific metrics like active users, token consumption, or ARR growth, but appear deathly ill once you examine the full financial picture.
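To make the routing idea concrete, here's a minimal Python sketch of picking the cheapest model that covers a task's needs. This is not how OpenRouter or MCP actually work under the hood; the catalog, model names, prices, and the `route` function are all hypothetical placeholders meant only to illustrate cost-and-capability routing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    usd_per_million_tokens: float
    capabilities: frozenset


# Hypothetical catalog: names and prices are placeholders, not real vendor pricing.
CATALOG = [
    ModelOption("vendor-a/large", 15.0, frozenset({"code", "reasoning", "chat"})),
    ModelOption("vendor-b/medium", 3.0, frozenset({"code", "chat"})),
    ModelOption("local/small", 0.0, frozenset({"chat"})),
]


def route(required: set, catalog=CATALOG) -> ModelOption:
    """Return the cheapest model whose capabilities include every required one."""
    candidates = [m for m in catalog if required <= m.capabilities]
    if not candidates:
        raise LookupError(f"no model offers {sorted(required)}")
    return min(candidates, key=lambda m: m.usd_per_million_tokens)


print(route({"chat"}).name)       # local/small
print(route({"code"}).name)       # vendor-b/medium
print(route({"reasoning"}).name)  # vendor-a/large
```

The point is that once every vendor speaks a common protocol, switching providers becomes a one-line catalog change rather than a migration, which is exactly what undermines the walled-garden strategy.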

Let me be clear: I do not see OpenAI or Anthropic surviving as standalone entities by 2030 barring a profound revolution in technology that allows us to reach AGI (which they probably don't want to happen, lest they be lynched by billions of unemployed workers before they reach their doomsday bunkers). They're simply burning through too much capital, too quickly, with no ROI to justify their fundraising valuations other than hype. Microsoft will likely acquire one outright when the bubble pops, and Oracle or IBM the other.

AI itself, however? It has proven remarkably resilient through past AI winters, and LLMs will be no exception. The difference is that it likely won't survive as a SaaS product, but as local infrastructure unique to each company and built on standard tooling like MCP.

The Sovereign will be rewarded

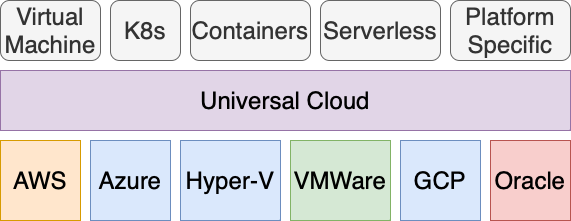

In a prior blog post, I pitched the idea that Big Tech companies aren't selling you a software product so much as trading your sovereignty for convenience. They hoard your data, they host your servers, they manage your DNS, and they sell you the tools to do it all on their platform exclusively. Despite my dream of a universal cloud, the industry was all too happy to settle on walled gardens for everybody, and sovereignty solely for the well-capitalized.

This concern has been proven justified given the United States' insanity and self-destructive behavior. The EU is actively seeking to move away from US technologies and companies in favor of homegrown solutions, an attitude that will certainly spread elsewhere as countries grapple with a multipolar world. This concern also extends to AI: countries don't necessarily trust major players like the USA or China with their data as-is, never mind letting it be processed by those countries' AI systems (e.g. DeepSeek, OpenAI, Anthropic). At the same time, burning through dwindling freshwater and electricity supplies to train their own foundation models non-stop is just as much a non-starter.

Thankfully, open-weight models and their tooling solve this issue. Any company with a few grand to spare can grab a memory-heavy PC or Mac with an NPU/TPU/GPU or similar accelerator, fire up something like LM Studio, and have a basic AI service running locally within their own network in a matter of hours, tops. No expensive AWS bills, no per-seat licensing on a capped subscription service, and no figuring out convoluted token billing schemes. Sure, you'll have to do some heavy lifting in terms of system prompts, API security, and tooling integrations, but that work gets easier as the tooling matures; homelab enthusiasts like myself can already offer such services at home on the cheap, and you can bet there's a glut of startups seeking to make loading and running models locally in a secure way as easy as installing ESXi and pointing it to LDAP.

The added benefit of running these locally is data security: no having to fret if your vendor has integrations with OpenAI or Anthropic, no building private tunnels or managing exit nodes into their APIs, just pointing directly to your internal server on your private LAN and speaking natively with your various databases and knowledge repositories.
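As a rough illustration of "pointing directly to your internal server," here's a minimal Python sketch using only the standard library. It assumes an OpenAI-compatible chat completions endpoint, which LM Studio's local server exposes (on port 1234 by default). The hostname `llm.internal.example`, the model name `local-model`, and the helper functions are hypothetical placeholders for whatever your LAN and loaded model actually use.

```python
import json
import urllib.request

# Hypothetical LAN hostname; LM Studio's local server defaults to port 1234.
LOCAL_BASE_URL = "http://llm.internal.example:1234/v1"


def build_chat_request(prompt: str, model: str,
                       base_url: str = LOCAL_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request to an internal host."""
    payload = {
        "model": model,  # placeholder: use the identifier of the model you loaded
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the first choice's text (requires a reachable server)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Usage would look like `send(build_chat_request("Summarize our on-call runbook.", "local-model"))`. Nothing in that round trip leaves your network, and no third-party API key is involved.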

Organizations that retained their sovereignty are the best positioned to benefit from LLMs and their capabilities, and they don't even have to train a foundation model to do so.

Enhancement, not Displacement

Locally hosted solutions have a multitude of added benefits for an organization: retention of institutional knowledge, customizations to enhance output or productivity, air-gapped security capabilities, and the enhancement of existing personnel rather than their displacement.

Let's say you're a cloud-native company that leans on Anthropic for your AI workloads. Sure, your talent is fairly replaceable thanks to common skill sets, but you're also effectively locking your business into an Anthropic-built prison. The same goes for your AWS fleet of servers, or your OpenShift containers: workers may have standard skills and be readily replaceable, but every single problem must fit within the confines of your preferred vendor's decisions regarding their tooling, environments, product lines, and business goals. You're not your own company; you're a subsidiary of your vendors.

Rolling things in-house, on the other hand, gives you flexibility. You don't have to work around the constantly shifting landscape of API price sheets, subscription tiers, and forced model changes anymore. You can run the same model indefinitely if you like, ensuring processes are repeatable and consistent rather than risking a new model deleting your production database. If you don't need a 120-billion-parameter model, you don't have to use it. If you don't mind latency, you can use less powerful hardware for longer. The flexibility is the point, and it means you can focus more on solving business problems than on problems created for your business by third-party vendors.

This next part is conjecture, but I wager you won't have to worry as much about employee turnover, either. Workers with common skills are easily replaceable, which also means that they're likely to bolt if they find a better wage elsewhere; neither party has loyalty, because the problems for either are the same regardless of who does the work or cuts the paychecks. A locally hosted AI deployment on sovereign infrastructure, though? That worker isn't likely to leave barring a significant dislike of their current employer or a significantly better offer elsewhere; likewise, employers are reluctant to axe someone who built and maintains their core infrastructure and has already netted them strong ROI. Sovereignty over your infrastructure shows commitment to the business and its workers.

All of this tackles a core grievance many workers (myself included) have with AI: in its present business model, it acts as a permanent displacement of workers rather than an enhancement of labor. The stated goals of these companies are to permanently remove workers outright and hoard money for companies and their stockholders, who are overwhelmingly billionaires. Using tools from companies like OpenAI or Anthropic explicitly supports this stated goal and harms workers, while running models and tools locally robs them of their concentration of power. I'd go so far as to say that any company serious about ensuring all of humanity reaps the benefits of AI should be doing everything in its power to run it locally and entirely offline, without licensing or phoning home.

I'm still incredibly wary of LLMs in general and of capital's current push to shove them into every nook and cranny possible, with complete disregard for safety and customer preferences. Even so, I would be foolish to ignore the demonstrable utility they have.

I just believe that the true AI revolution won't happen in a Microsoft bit barn, but in the hands (and datacenters, and homelabs) of end users, one local deployment at a time.